What is ChatGPT exactly?

ChatGPT is a chat interface based on the GPT-series Large Language Models (LLMs) by OpenAI. You can ask it whatever you want and give it commands to generate structured information. Think of use cases like asking a question, having a piece of text translated, making calculations based on a problem description, having code generated for a feature you’re thinking of, and the list goes on…

But how is it doing all of this? Arman, how does ChatGPT work?

So, ChatGPT is a product made by OpenAI based on its GPT-3.5 series. It’s a Large Language Model (LLM) with 20 billion parameters, specifically trained to model conversations, partly by hand: people wrote and rated example responses that the model then learned from. The model combines Natural Language Understanding (NLU) to interpret input with Natural Language Generation (NLG) to formulate a response. That last bit, NLG, has taken a significant step forward with the introduction of ChatGPT, better enabling “generative” AI use cases.

Hold up, LLMs, generative AI, parameters, what’s that all about?

Simply put, an LLM is an algorithm that recognizes, summarizes, translates, predicts, and generates text. It can do this thanks to the huge data sets used to train the model. One way to express its capacity to learn is to count its parameters. A parameter is a variable inside the model whose value is learned from the data in the underlying data set. So, the number of parameters says something about how many nuances a model can absorb. So far, we’ve seen a strong correlation between the number of parameters a model has and its ability to generate human-like responses and behavior.
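To make "number of parameters" concrete, here is a minimal sketch that counts the learnable values in a tiny, fully connected network. The layer sizes are made up for illustration, and this toy network is not how ChatGPT itself is structured; it only shows what is being counted.

```python
def count_parameters(layer_sizes):
    """Count the weights and biases of a fully connected network.

    layer_sizes lists the number of units per layer, input first.
    The sizes used below are hypothetical and chosen for illustration.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out  # one weight per connection between the layers
        total += n_out         # one bias per unit in the receiving layer
    return total


# A toy model: 4 inputs, one hidden layer of 8 units, 2 outputs.
toy_model = [4, 8, 2]
print(count_parameters(toy_model))  # (4*8 + 8) + (8*2 + 2) = 58
```

Every one of those 58 numbers is adjusted during training. An LLM works on the same principle, just with billions of such values.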

All right, so it's a chatbot that understands nuance and can generate its own responses. What do you see as the major advantages and disadvantages of this development?

Aside from the opportunities and powerful new use cases this technology brings, I see the hype surrounding it as the biggest advantage. The extraordinary enthusiasm brings an increase in curiosity and demand for technology in Generative AI. It puts the power of conversational AI on public display, and people love it. Ultimately, this will further drive innovation as more people and companies get involved.

There are some early downsides too. We’ve noticed that during the early stages of these types of developments, many people find it hard to cut through the noise and see the real business value. ChatGPT is perceived as an omniscient and omnipotent technology, which isn’t exactly true. It’s a conversational AI model that’s great at Natural Language Generation, but it’s not perfect. It makes mistakes. That is fine as long as its users understand that it occasionally gets things wrong and is not some panacea. This phase will pass, and what we will be left with will be extremely impressive.

ChatGPT vs Conversational AI

Human-like conversations sound like something that people are also trying to achieve with Conversational AI Cloud.

What is the difference between ChatGPT and Conversational AI Cloud?

Good question. OpenAI’s GPT series is a great application of the “new” transformer architecture that Google introduced to the world in 2017. Our Conversational AI Cloud has been using transformer models for years. The big difference is what a Conversational AI Platform has built around those models to truly deliver business value for an organization.

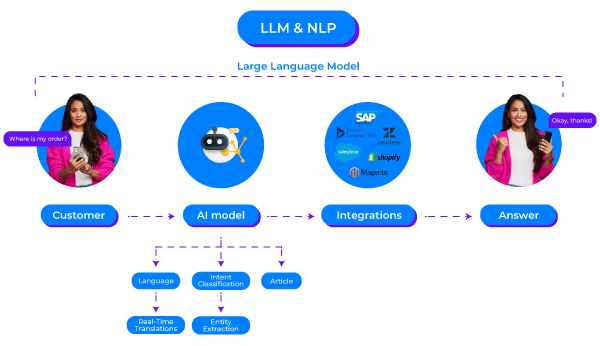

Conversational AI Cloud helps companies to address specific business problems by automating conversations. Natural Language Processing (NLP) is at the heart of that. When you need to automate many questions, complex questions, or both, rule-based models quickly hit their limits, so employing AI models simply makes sense.

In isolation, those models don’t do a lot. Businesses want to deploy AI on webchat, WhatsApp, and other channels. They want to test their models before deploying new versions. And they want to easily add or update content when they need to update their prices or add a new conversational flow, for example. They want to gain insights on their KPIs like model performance or deflection rate. And what about integrations with existing systems such as an OMS, CDP, CRM, or ERP? If you can’t execute real actions with your Conversational AI, such as fetching or updating records to give status updates to customers or change their information, then you can’t do much. ChatGPT cannot do any of this. But with Conversational AI Cloud you can. So, the models that we use “under the hood” complement a broad set of capabilities that form our full solution.

Answers given by Conversational AI Cloud can adapt to context but are vetted by copywriters in your firm to ensure responses are always factually correct, on brand, and carry the right tone of voice. So, depending on how well your environment is set up, the worst that can happen is that your AI, in some minor percentage of cases, will answer with “I don’t know the answer to that question”. Yet even that sentence will be phrased exactly how you want it to be phrased, and that flow is fully controlled. All these principles result in a low-risk profile for using Conversational AI Cloud to interact with your customers.
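The controlled flow described above can be sketched roughly as follows. This is an illustration only, not how Conversational AI Cloud is actually implemented: the function name, the threshold value, and the example intents and answers are all hypothetical.

```python
# Hypothetical fallback text; in practice a copywriter would phrase this.
FALLBACK = "I don't know the answer to that question."


def choose_answer(intent_scores, vetted_answers, threshold=0.7, fallback=FALLBACK):
    """Return a pre-approved answer, or the fallback when recognition is unsure.

    intent_scores maps intent names to confidence scores between 0 and 1.
    vetted_answers maps intent names to copywriter-approved texts.
    The 0.7 threshold is an assumed value for illustration.
    """
    if not intent_scores:
        return fallback
    best_intent = max(intent_scores, key=intent_scores.get)
    if intent_scores[best_intent] < threshold:
        return fallback
    # Only texts that were vetted in advance can ever be returned.
    return vetted_answers.get(best_intent, fallback)


answers = {"opening_hours": "We are open on weekdays from 9:00 to 17:00."}
print(choose_answer({"opening_hours": 0.92, "pricing": 0.05}, answers))
print(choose_answer({"opening_hours": 0.40, "pricing": 0.35}, answers))
```

The point of the design is that the model only selects among answers; it never writes one, so every possible response has been reviewed before a customer sees it.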

And this is also the big difference between ChatGPT and Conversational AI Cloud. ChatGPT’s behavior is what you would call a black box. Ask the exact same question five times, and you will get five different answers (or not, you’ll never be sure). Sometimes, one of those answers will sound great but be factually incorrect. Your AI making up answers on the spot saves you an FTE, maybe two, in administrative work. But simultaneously, it decreases your capability to exercise control, be transparent, and maintain quality. So, the risk profile of having generative AI output go directly to your customers is significantly higher (at least now that we’re still in the early days of this technology). We’ll get past that point sometime, but not today.
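One reason the same question can yield different answers is that generative models typically sample their output from a probability distribution instead of always picking the single most likely option. The sketch below shows that idea with a toy set of candidate words; the scores, the words, and the function name are invented for illustration and do not come from any real model.

```python
import math
import random


def sample_next_word(scores, temperature, rng):
    """Pick one word from a dict of raw scores using temperature sampling.

    temperature == 0 always returns the top-scoring word (deterministic);
    higher temperatures spread the choice over more candidates.
    """
    if temperature == 0:
        return max(scores, key=scores.get)
    words = list(scores)
    scaled = [scores[word] / temperature for word in words]
    highest = max(scaled)
    # Softmax weights; subtracting the maximum keeps exp() numerically stable.
    weights = [math.exp(value - highest) for value in scaled]
    return rng.choices(words, weights=weights, k=1)[0]


# Made-up scores for three candidate continuations.
toy_scores = {"refund": 2.0, "voucher": 1.5, "replacement": 1.0}
rng = random.Random()

print([sample_next_word(toy_scores, 1.0, rng) for _ in range(5)])  # may vary per run
print([sample_next_word(toy_scores, 0, rng) for _ in range(5)])    # always "refund"
```

Turning the temperature down makes the output repeatable, but it does not make it correct: the most likely continuation can still be factually wrong.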

OK, so the audience and the use cases are very different?

That’s a good way to summarize it. Our models will keep on evolving as they have done in the past. And I’m sure that the LLMs currently being developed and released will greatly enhance the capabilities of our products. They won’t cannibalize them; these are two different, complementary things.

CM.com & ChatGPT

So, Arman, when you say these models will enhance our products, what does that mean? And does CM.com have any plans around adopting these new models?

What role can ChatGPT play in our products?

We see a lot of opportunities to enhance our products and further enable the users of our products and the work they’ve been doing for the past years. Whether it’s a conversation designer in Conversational AI Cloud, an agent in Mobile Service Cloud, or a marketeer in our Mobile Marketing Cloud, they are all generating content. Using generative AI to make content suggestions and having our customers run a final check will take a lot of work off their hands.

The important thing for us is that we don’t get carried away with the hype and integrate these technologies for the sake of incorporating them. We only want to implement features where significant value is added for our products and customers. We’re not about marketing-driven labeling of our capabilities with “AI-powered this, AI-powered that”. Generative AI and LLMs are mighty and make a lot of sense in many places, but we are conscious of the downsides and ensure that any new features we offer deliver quality and value.

So, tell us, what are we building right now, and what can we expect for the future?

I’m glad you asked. We have a few things in the pipeline that really get our hearts racing. Let's look at some of them.

Generating Conversational Content

Our goal is to achieve a faster go-live for our Conversational AI clients, whether it’s a new customer just getting started or an existing customer wanting to add a new conversational flow. Though we pride ourselves on ease of use, setting up intent models and article structures takes time. That time can be significantly reduced if you, as a conversation designer, have what you could call a ghostwriter doing the heavy lifting for you. In the short term, that means prompting Conversational AI Cloud with information about your business. Say you’re a utility company. You can share that fact and provide some minor details about your products and services, after which the LLM will automatically generate the questions and points of recognition that you can expect to receive from your customers. You can treat the generated output as a draft: review it, test it, and publish it. Coming up with that content yourself takes a lot of time you could have spent elsewhere.

We’re starting with intents, and we’ve already identified multiple other areas which will benefit from this type of solution. Over time we’ll add more and more of these capabilities to the CM.com portfolio.

LLMs for real-time natural language processing

One thing we’re always working on is our NLP models in our Conversational AI Cloud, and making agents’ lives easier in our Mobile Service Cloud. The goal here is to improve our existing NLP infrastructure with the power of LLMs by investigating topics like zero- and few-shot recognition. And again, here, we also focus on the value this will bring to our products. Stronger recognition models mean fewer escalations towards live agents, and more fine-grained routing when escalations do occur results in a lower average handling time per conversation. All of this will add significant value to the entire CM.com platform. Aside from that, we’re also looking at topics like spelling and grammar corrections to help conversation designers, contact center agents, and marketeers.
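To illustrate what zero- and few-shot recognition means in practice, the sketch below builds a prompt that asks an LLM to pick an intent for a customer message. With an empty example list it is zero-shot; with a handful of labeled examples it is few-shot. The intents, messages, and prompt wording are hypothetical, and no actual model is called here.

```python
def build_intent_prompt(intents, message, examples=()):
    """Build a text prompt asking a language model to classify a message.

    examples is a sequence of (message, intent) pairs. Leave it empty for
    a zero-shot prompt, or pass a few pairs for a few-shot prompt.
    """
    lines = [
        "Classify the customer message into one of these intents: "
        + ", ".join(intents) + ".",
        "",
    ]
    for example_text, example_intent in examples:
        lines.append(f"Message: {example_text}")
        lines.append(f"Intent: {example_intent}")
        lines.append("")
    # The final "Intent:" is left open for the model to complete.
    lines.append(f"Message: {message}")
    lines.append("Intent:")
    return "\n".join(lines)


intents = ["billing", "outage", "moving_house"]
few_shot_examples = [
    ("Why is my invoice higher this month?", "billing"),
    ("The power has been out since this morning.", "outage"),
]

print(build_intent_prompt(intents, "I am relocating next week.", few_shot_examples))
```

The appeal of this approach is that new intents can be recognized from a description and a few examples, without collecting and labeling a large training set first.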

Personalization through sentiment

ChatGPT makes a good case for how the sentiment of a conversation can improve replies. When negative sentiment is spotted, we can counterbalance it by rephrasing static answers to be more empathetic or apologetic about the situation at hand. This concept can also work well for suggestions to contact center agents. Overall, this will create a better end-user experience, resulting in a stronger bond between our customers and their end-users.

Summarizations and search capabilities

Recognition models don’t always perform well on long-form content. Summarizing long-form input before running it through our recognition models is a good way to increase the overall recognition rate and answer all incoming questions with higher confidence and precision.

When we organize handoffs to human agents, those agents will also be able to reduce their average handling time if they can read a summary of the conversation between the bot and the customer. The agents can get up to speed faster and provide a better and more timely first response. Even a minor improvement in first response time is correlated with a higher satisfaction rate, whether that’s expressed in NPS, CSAT, or CES.

The future starts today

To conclude, I’m curious what developments like ChatGPT, LLMs, and generative AI will bring to the table in the short term and what our more long-term expectations are after we’ve released these human-in-the-loop features you touched upon earlier.

What does ChatGPT mean for business today and in the future?

As a stand-alone product, ChatGPT is something of a personal assistant that can boost every employee’s individual productivity as a supplement to tools like search engines and wikis in the very short term (read: today).

As access to the LLMs behind products like ChatGPT and Bard finds its way into the hands of developers, we’ll start seeing more specific implementations that solve problems on a business scale, rather than just individual productivity increases for knowledge workers. The human-in-the-loop use cases in products like our own are a perfect example.

In the long term, we’ll eventually get past the black-box uncertainties as professionals familiarize themselves with using these models to speak directly with consumers. Just like it did back in the day with NLP: it will take some time. People need to learn the right skill sets and metrics to work with these models at that level. For NLP, F1 scores, precision, recall, and comparable metrics for evaluation and training have become common knowledge. Today, you can find plenty of papers detailing similar metrics for NLG. The cascade from early adopters being comfortable with that to the early majority buyers will take some time. How much time exactly? I don’t think anyone has the answer to that.
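For readers less familiar with the metrics mentioned above, here is a minimal sketch of how precision, recall, and F1 are computed for a single intent. The counts in the example are invented for illustration.

```python
def precision_recall_f1(true_positives, false_positives, false_negatives):
    """Compute precision, recall, and F1 from raw counts for one class."""
    predicted = true_positives + false_positives  # everything the model flagged
    actual = true_positives + false_negatives     # everything it should have flagged
    precision = true_positives / predicted if predicted else 0.0
    recall = true_positives / actual if actual else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical results for a "billing" intent: 80 messages correctly
# recognized, 20 wrongly labeled as billing, 10 billing messages missed.
precision, recall, f1 = precision_recall_f1(80, 20, 10)
print(f"precision={precision:.2f} recall={recall:.2f} f1={f1:.2f}")
# precision=0.80 recall=0.89 f1=0.84
```

Precision tells you how often a recognized intent was right, recall tells you how many relevant messages were caught, and F1 balances the two in a single number.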

In the meantime, we’re pretty hyped to release our first LLM features in March and continue pushing for even more advanced cases as the year progresses.

To be continued!